Build a Local LLM-based RAG System for Your Personal Documents - Part 1

Learn how to build your own privacy-friendly RAG system to manage personal documents with ease.

Hey there, are you ready to transform your mundane private/personal document management into a high-tech, AI-powered system that you control entirely on your local machine? No cloud, no privacy concerns, just you and your documents. Trust me—it’s cooler than it sounds. Yes REALLYYY! 😎

Today, we’re diving into the world of LLMs and Retrieval-Augmented Generation (RAG) systems, but with a twist—it’s all about doing it locally and privately, like a boss.

The Backstory: Why Build a Private RAG System?

If you live in Germany, you already know the paper struggle is REAL. Between utility bills, bank letters, and tax documents, it feels like we get a mountain of mail every week. The problem? These documents quickly pile up, and finding that one piece of information when you need it can be like searching for a needle in a haystack.

And sure, while OpenAI’s ChatGPT might seem like the obvious solution for a quick search, let’s be real: privacy matters. I’m sure that, like us, you would hesitate to upload your sensitive documents to ChatGPT. That’s where the inspiration for this project came from: building a personal, private RAG (Retrieval-Augmented Generation) system on our own machine, with full control and privacy.

By converting all these letters and documents into PDFs and uploading them to this local RAG system, we can now easily find the information we need. No more endless searches through piles of paper. Plus, it’s free—and who doesn’t love that? You get to chat with a local LLM (Large Language Model) without worrying about data privacy. Sounds pretty awesome, right?

Here’s How It Looks!

A small demo:

You really thought we would display private information as part of the demo? 😅

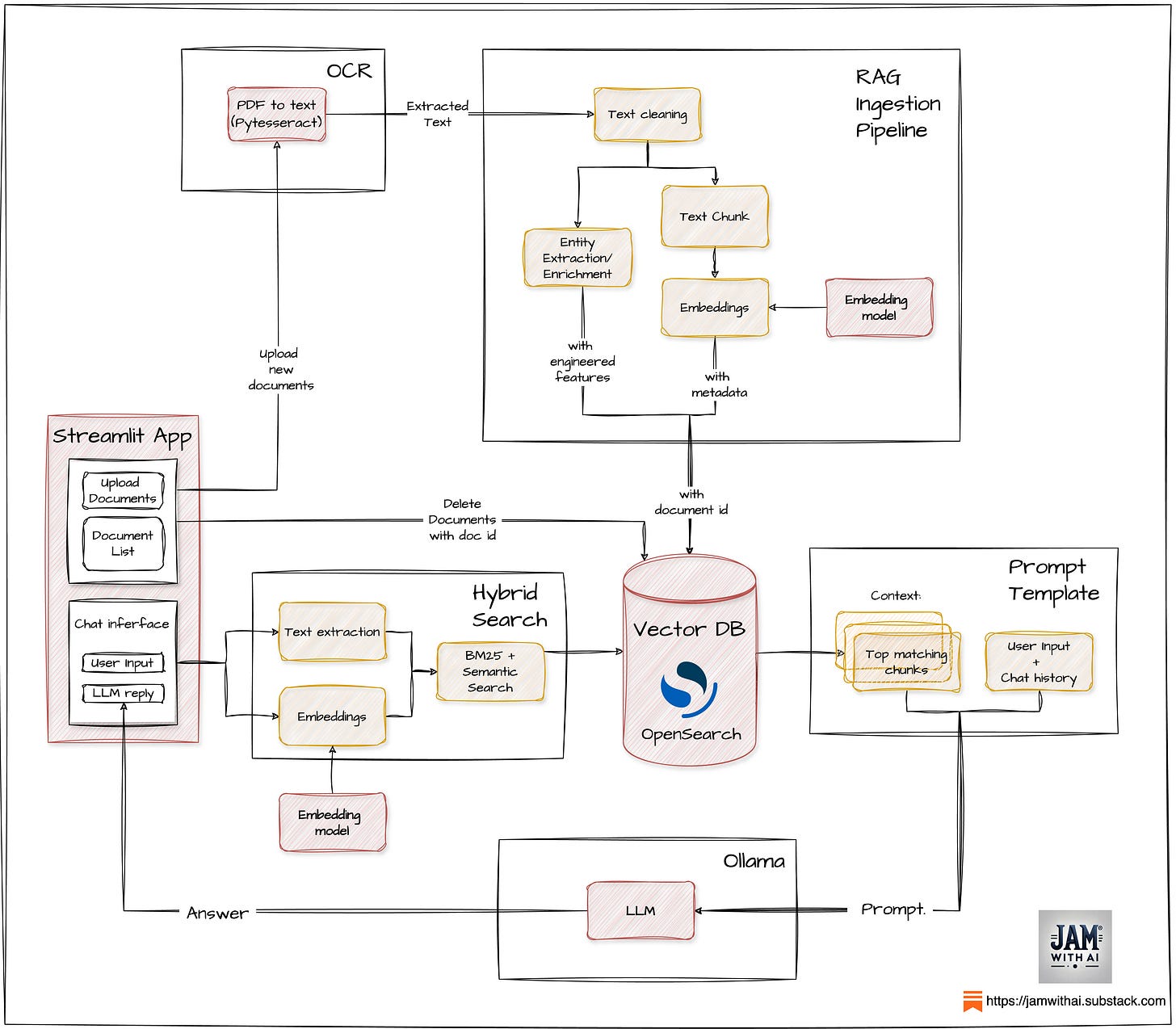

The High-Level RAG System Design 🛠️

Streamlit App

This is where it all starts. Upload your PDF files using a simple, intuitive UI. Whether it’s contracts, bills, or letters, the app takes care of all the interaction without any fuss. You drag, drop, and voilà—your documents are now ready for processing.

OCR and Ingestion pipeline

Next comes PyTesseract, our tool for extracting text from scanned PDFs. Once the text is extracted, it’s divided into manageable chunks and passed through SentenceTransformers to generate embeddings. For this, we’re using a German-language embedding model from Hugging Face: mixedbread-ai/deepset-mxbai-embed-de-large-v1.
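To make the chunking step concrete, here is a minimal sketch in plain Python. It assumes word-based chunks with a small overlap. The post doesn’t state the chunk size or strategy actually used, so `chunk_text`, `chunk_size`, and `overlap` are hypothetical names and values chosen for illustration.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into word-based chunks where neighbours share `overlap` words.

    The sizes are illustrative assumptions, not the values used in the post.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")

    words = text.split()
    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    sample = "Sehr geehrte Damen und Herren, anbei erhalten Sie Ihre Jahresabrechnung."
    for chunk in chunk_text(sample, chunk_size=4, overlap=1):
        print(chunk)
```

Each chunk returned here would then be handed to the embedding model, for example via the `encode` method of a `SentenceTransformer` loaded with the model name above. The overlap helps keep a sentence that straddles a chunk boundary retrievable from either side.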

Indexing with OpenSearch

The extracted text, embeddings, and additional features are stored in an OpenSearch index, which makes document retrieval really fast. We’ve set up a hybrid search pipeline that uses both the text and the embeddings to find exactly the right documents when you ask a question. To set up hybrid search, we configure an OpenSearch search pipeline with a normalization-processor, which puts the keyword and vector scores on a common scale so they can be combined. (Bonus: all of this runs locally on Docker for total privacy!)
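As a rough sketch of what that search pipeline definition can look like, the snippet below builds the JSON body for an OpenSearch search pipeline with a normalization-processor. The `min_max` and `arithmetic_mean` techniques and the 0.3/0.7 weights are assumptions for illustration, since the post doesn’t say which settings were used. `build_hybrid_pipeline` is a hypothetical helper.

```python
import json


def build_hybrid_pipeline(keyword_weight: float = 0.3, vector_weight: float = 0.7) -> dict:
    """Build the body for an OpenSearch search pipeline used by hybrid queries.

    The weights apply to the sub-queries in the order they appear in the
    hybrid query (keyword first, vector second in this sketch).
    """
    if abs(keyword_weight + vector_weight - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")

    return {
        "description": "Post-processor for hybrid search",
        "phase_results_processors": [
            {
                "normalization-processor": {
                    # Rescale each sub-query's scores to the range 0..1.
                    "normalization": {"technique": "min_max"},
                    # Blend the rescaled scores with a weighted average.
                    "combination": {
                        "technique": "arithmetic_mean",
                        "parameters": {"weights": [keyword_weight, vector_weight]},
                    },
                }
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_hybrid_pipeline(), indent=2))
```

The resulting body would be sent to the local OpenSearch instance with `PUT /_search/pipeline/<pipeline-name>`, where the pipeline name is yours to choose.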

Hybrid Search

When you ask a question, the system converts it into an embedding and queries OpenSearch with both the embedding and the original question text using hybrid search. It retrieves the 5 most relevant chunks of text and passes them on to the LLM, which is served by Ollama. Ollama runs locally too, so no cloud worries!
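Here is a minimal sketch of the request body such a hybrid query could use. It assumes the index stores chunk text in a field called `text` and vectors in a field called `embedding`. Both field names, and the helper `build_hybrid_query`, are hypothetical, as the post doesn’t show the index mapping.

```python
def build_hybrid_query(question: str, question_embedding: list[float], top_k: int = 5) -> dict:
    """Build an OpenSearch hybrid query combining keyword and k-NN search.

    Field names `text` and `embedding` are assumptions about the index mapping.
    """
    return {
        "size": top_k,  # return the top 5 chunks by default
        # Skip returning the stored vectors; only the text is needed downstream.
        "_source": {"excludes": ["embedding"]},
        "query": {
            "hybrid": {
                "queries": [
                    # Keyword (BM25) match on the chunk text.
                    {"match": {"text": {"query": question}}},
                    # Vector similarity against the stored chunk embeddings.
                    {"knn": {"embedding": {"vector": question_embedding, "k": top_k}}},
                ]
            }
        },
    }


if __name__ == "__main__":
    # A tiny fake embedding, just to show the shape of the request.
    body = build_hybrid_query("Wann ist die Stromrechnung fällig?", [0.1, 0.2, 0.3])
    print(body["query"]["hybrid"]["queries"][0])
```

The body would be sent to `GET /<index>/_search?search_pipeline=<pipeline-name>` so that the normalization-processor combines the two score lists. In the real system the embedding comes from the same model used at ingestion time.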

Prompt template and Ollama

Finally, the retrieved chunks act as context for the LLM. Combined with a purpose-built prompt, they let the LLM answer your question without you having to go through loads of documents. Voilà! 🎉 That’s it!
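To illustrate the idea, here is a small sketch of a prompt template and the request payload for Ollama’s local `/api/generate` endpoint. The template wording, the helper names, and the model name `llama3` are assumptions, since the post doesn’t show the prompt or name the model it runs.

```python
PROMPT_TEMPLATE = """You are a helpful assistant for personal documents.
Answer the question using only the context below.
If the answer is not in the context, say that you don't know.

Context:
{context}

Question: {question}
Answer:"""


def build_prompt(question: str, chunks: list[str]) -> str:
    """Fill the template with the retrieved chunks and the user's question."""
    # Number the chunks so the model can tell them apart.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return PROMPT_TEMPLATE.format(context=context, question=question)


def build_ollama_request(prompt: str, model: str = "llama3") -> dict:
    """Build the JSON payload for POST http://localhost:11434/api/generate.

    The model name is a placeholder; use whichever model you pulled locally.
    """
    return {"model": model, "prompt": prompt, "stream": False}


if __name__ == "__main__":
    chunks = ["Die Rechnung ist bis zum 15. März zu zahlen."]
    prompt = build_prompt("Wann ist die Rechnung fällig?", chunks)
    print(build_ollama_request(prompt)["prompt"])
```

With `stream` set to `False`, Ollama returns a single JSON object whose `response` field holds the generated answer. Telling the model to stick to the context is one simple way to keep answers tied to your own documents.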

Building your own local RAG system isn’t just a fun side project—it’s genuinely helpful for managing private documents while keeping everything secure and offline. With tools like OpenSearch, Ollama, PyTesseract, and Sentence Transformers, you can create your very own AI assistant that respects your privacy. 🙌

Part 2: Diving into the Code (Coming Soon!)

In Part 2, we’ll walk through the actual complete code to set up this system on your local machine. We’ll cover how to:

Run and configure OpenSearch with hybrid search locally using Docker.

Integrate PyTesseract to handle scanned documents.

Set up text chunking and embedding generation.

Build a Streamlit UI for uploading documents and chatting with the LLM.

Set up the retrieval cycle.

If you’re excited about building your own local RAG system and want to dive into the code, stay tuned for the next post where we’ll walk through it all step-by-step. We’ll cover everything from setting up Docker with OpenSearch to building the Streamlit UI and integrating PyTesseract.

Don’t miss out—subscribe to Jam With AI for updates and to support our work! 🤘

And hey, if you found this post useful or know someone who might benefit from building their own private RAG system, feel free to share it with your friends and community. Let’s spread the AI magic together by sharing this blog!

Danke Schön! Leaving you with a funny video that takes you through our journey of building this system in a day! ENJOYYY!!! (with music please!) 😂

