Bringing Your RAG System to Life - The Data Pipeline

Mother of AI Project, Phase 1: Week 2

Hey there! 👋

Week 1 was all about infrastructure first, AI second. We built the foundation - PostgreSQL running, OpenSearch ready, the Airflow dashboard looking great, Docker orchestration working like magic.

Missed Week 1? Catch up:

But here's the thing: our database had exactly zero rows.

Beautiful infrastructure. Perfect setup. Completely empty.

Sound familiar? This is the moment every AI engineer faces when they move beyond tutorials into the real world. You've got the pipes, but no water flowing through them.

This week, we changed that!

The Uncomfortable Truth About Data Pipelines

Let's be brutally honest: most RAG tutorials are basically fraud.

They give you a nice, clean CSV/PDF file and say "now build RAG!" But in production? You're staring at:

APIs with rate limits that will ban you faster than you can think

PDFs that seem designed to break every parser known to humanity

Network timeouts, corrupt downloads, and the soul-crushing realization that data engineering is where dreams go to die

Data pipelines aren't sexy. They don't make for impressive demos. You can't screenshot a rate-limiting algorithm or put async error handling in your portfolio.

This isn't a bug - it's a feature. Data engineering IS AI engineering. The algorithms are just the cherry on top of a massive infrastructure sundae.

The Foundation Everything Else Depends On

Every successful AI system you've ever used - search engines, recommendation systems, language models - has industrial-strength data pipelines at its core.

Google's magic? Crawling and processing the entire web, reliably, every day

Netflix recommendations? Ingesting viewing data from millions of users in real-time

ChatGPT's intelligence? Processing terabytes of text data with rigorous quality filtering

The algorithms get the glory. The data pipelines do the work!

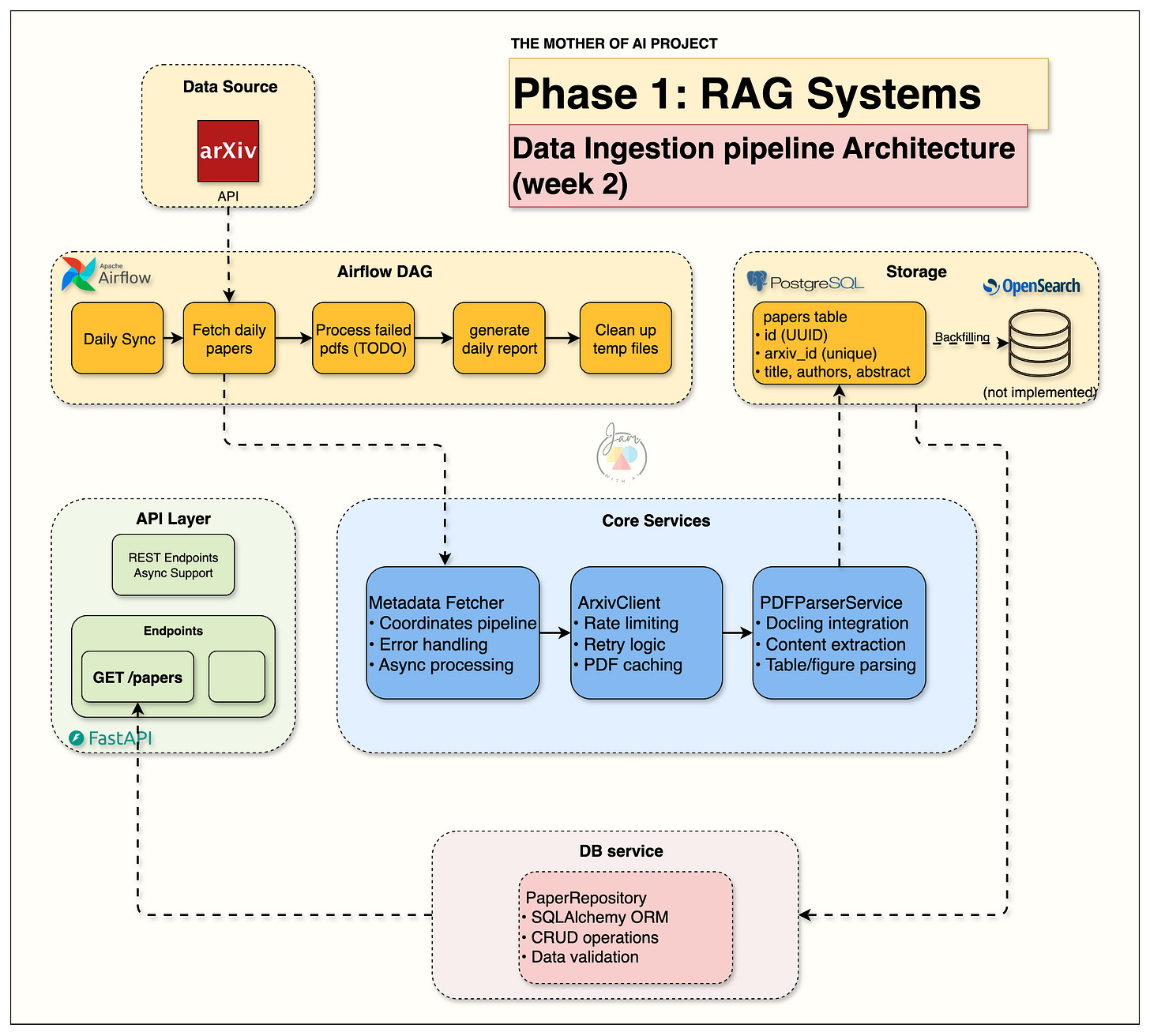

What We Built:

A Data Pipeline That Actually Works

After a week of pain, debugging, and learning, we transformed our beautiful-but-empty infrastructure into something magical: a self-sustaining research paper ingestion machine.

The Transformation

Before Week 2:

Beautiful infrastructure

Zero papers in database

Manual everything

Dreams of automation

After Week 2:

Automated arXiv Integration → Daily cs.AI paper fetching with bulletproof rate limiting

Scientific PDF Processing → Docling-powered extraction that actually understands academic papers

Production Airflow Workflows → Robust pipelines with retry logic and comprehensive error handling

Living Database → Rich, structured paper content growing automatically every day

The result?

Our system now wakes up every morning at 6 AM UTC, fetches the latest AI research papers, processes them, and adds structured content to our database.

Without any human intervention.

Zero to 100+ papers daily. Completely automated. Genuinely production-grade!

This is what separates real systems from demo code.

The Architecture That Makes It Work

The Service Layer That Changes Everything

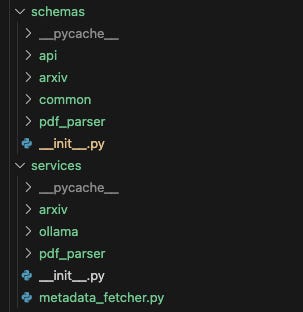

Look at what we added to our codebase:

```text
src/services/
├── arxiv/                  # Smart arXiv API integration
│   ├── client.py           # Rate-limited, retry-aware client
│   └── factory.py          # Clean service instantiation
├── pdf_parser/             # Scientific PDF processing
│   ├── docling.py          # Docling integration for academic papers
│   ├── parser.py           # Abstract interface
│   └── factory.py          # Parser factory pattern
└── metadata_fetcher.py     # The orchestrator that ties everything together
```

This isn't just "call an API and save to database." This is production-grade service architecture with:

Factory patterns for clean dependency injection

Abstract interfaces for swappable implementations

Async processing for concurrent operations

Comprehensive error handling for real-world reliability
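To make the factory-plus-interface idea concrete, here's a minimal sketch of how a parser interface and a factory could fit together. The names (`PDFParser`, `DoclingParser`, `make_pdf_parser`, `register_parser`) are hypothetical, not the project's actual code. The Docling call uses its `DocumentConverter` API and is imported lazily, so nothing else in the module depends on Docling being installed.

```python
from abc import ABC, abstractmethod
from pathlib import Path
from typing import Callable, Dict


class PDFParser(ABC):
    """Abstract interface: every parser turns a PDF file into text."""

    @abstractmethod
    def parse(self, pdf_path: Path) -> str:
        ...


class DoclingParser(PDFParser):
    """Docling-backed implementation (assumes the `docling` package is installed)."""

    def parse(self, pdf_path: Path) -> str:
        # Lazy import: constructing the parser never requires Docling.
        from docling.document_converter import DocumentConverter

        result = DocumentConverter().convert(str(pdf_path))
        return result.document.export_to_markdown()


# Hypothetical registry mapping a config name to a parser constructor.
_PARSERS: Dict[str, Callable[[], PDFParser]] = {"docling": DoclingParser}


def register_parser(name: str, factory: Callable[[], PDFParser]) -> None:
    """Add or replace an implementation without touching calling code."""
    _PARSERS[name] = factory


def make_pdf_parser(name: str = "docling") -> PDFParser:
    """Factory: callers ask for a parser by name, never by concrete class."""
    try:
        return _PARSERS[name]()
    except KeyError:
        raise ValueError(f"Unknown PDF parser: {name!r}") from None
```

Swapping implementations is then a single `register_parser` call, and tests can register a stub parser instead of running Docling.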

Why This Architecture Matters

Testable: Each service has clear interfaces and responsibilities

Scalable: Async processing handles concurrent operations elegantly

Maintainable: Factory patterns make swapping implementations trivial

Observable: Comprehensive logging and error handling throughout
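In practice, "async processing for concurrent operations" mostly comes down to bounded concurrency. Here's a minimal sketch using `asyncio.Semaphore`; `run_bounded` is a hypothetical helper, not the project's API.

```python
import asyncio
from typing import Awaitable, Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


async def run_bounded(
    items: Iterable[T],
    worker: Callable[[T], Awaitable[R]],
    max_concurrency: int = 4,
) -> List[R]:
    """Run `worker` over `items` with at most `max_concurrency` in flight.

    Results come back in input order, as with asyncio.gather.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(item: T) -> R:
        # Waits here whenever max_concurrency workers are already running.
        async with semaphore:
            return await worker(item)

    return await asyncio.gather(*(guarded(item) for item in items))
```

The semaphore is what keeps concurrency controlled: raising `max_concurrency` trades speed for load. For CPU-heavy work like Docling parsing, the worker would likely hand off to a thread or process pool (for example via `asyncio.to_thread`), since the event loop alone doesn't run Python code in parallel. Calls to arXiv itself should still go through the 3-second rate limiter.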

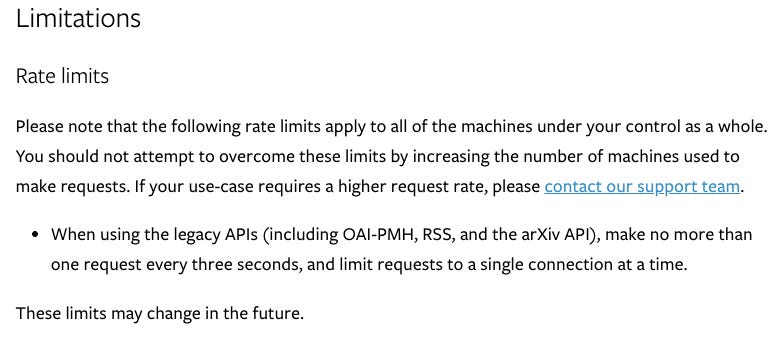

Lesson 1: Rate Limits and the arXiv API

We're joking - this didn't happen to us. But this exact scenario happens to most engineers building their first data pipeline.

The pattern is always the same: Script works fine for a few requests → confidence builds → scale it up → 💥 BANNED 💥

arXiv isn't some random startup API you can hammer. It's a global scholarly repository serving researchers worldwide. They have rules: 3-second delays between requests, period.

Break them, and you're not just rate-limited, you're potentially disrupting access for scientists everywhere.

Lesson learned: Respect the services you depend on.

The arXiv Rules Are Non-Negotiable

The guidelines are crystal clear:

3-second delays between requests

Not 2.9 seconds

Not "usually 3 seconds"

At least 3 seconds, every single time

This isn't politeness - it's how you build systems that run for months without breaking. Our ArxivClient became a masterclass in respectful engineering:

✅ Request timestamp tracking (we know exactly when we last hit the API)

✅ Intelligent delays (if it's been 2.1 seconds, we wait 0.9 more)

✅ Exponential backoff (graceful handling when things go wrong)

✅ Smart caching (never download the same PDF twice)
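Here's a minimal, synchronous sketch of those ideas: timestamp tracking, topping up the delay, exponential backoff, and a cache check before downloading. The class and function names, the 5-second base delay, and the cache file naming are assumptions for illustration, not the actual `ArxivClient` implementation. The clock and sleep functions are injectable so the logic can be tested without really waiting.

```python
import time
from pathlib import Path
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class MinIntervalRateLimiter:
    """Guarantees at least `min_interval` seconds between consecutive requests."""

    def __init__(self, min_interval: float = 3.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request: Optional[float] = None  # when we last hit the API

    def wait(self) -> None:
        now = self._clock()
        if self._last_request is not None:
            elapsed = now - self._last_request
            if elapsed < self.min_interval:
                # e.g. 2.1 s elapsed -> sleep the remaining 0.9 s
                self._sleep(self.min_interval - elapsed)
        self._last_request = self._clock()


def with_backoff(call: Callable[[], T], max_retries: int = 3,
                 base_delay: float = 5.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` on any exception, doubling the delay each time (5 s, 10 s, 20 s...)."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except Exception:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            sleep(base_delay * (2 ** attempt))
    raise ValueError("max_retries must be >= 0")


def cached_pdf_path(cache_dir: Path, arxiv_id: str,
                    download: Callable[[Path], None]) -> Path:
    """Return the cached PDF for `arxiv_id`, downloading only on a cache miss."""
    # Old-style IDs like "hep-th/9901001" contain a slash, so flatten it.
    target = cache_dir / f"{arxiv_id.replace('/', '_')}.pdf"
    if not target.exists():
        cache_dir.mkdir(parents=True, exist_ok=True)
        download(target)
    return target
```

Calling `limiter.wait()` inside the function passed to `with_backoff` means every retry respects the 3-second floor too.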

Lesson 2: Scientific PDF Processing 📄💀

The reality: Scientific PDFs break every standard parser you've ever used.

Academic papers aren't just "text in PDFs"; they're structured documents with mathematical notation, multi-column layouts, complex tables, and reference formatting that carries semantic meaning.

We tried PyPDF2, pdfplumber, and others. The results? Mathematical equations became gibberish, tables exploded into word clouds, and searching for "attention mechanisms" returned "ttention mchnsm" because characters randomly vanished.

Bad data quality doesn't just slow you down - it completely breaks your system.

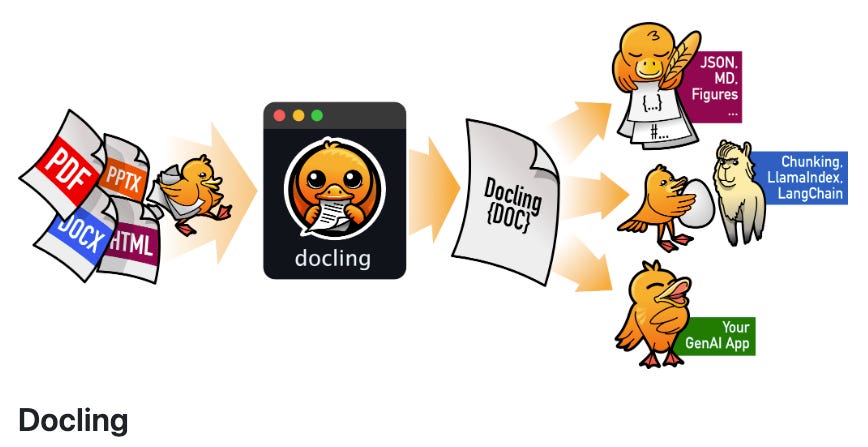

Why We Chose Docling

After testing multiple PDF libraries, Docling stood out for production use:

✅ Consistent output → Same PDF, same parse, no surprises

✅ Scientific document understanding → Preserves structure, not just text

✅ Structured metadata → Separate section headers, tables, figures, references

✅ Production-ready → Easy Python package, clean tabular output

✅ Advanced features → OCR support, VLM integration for figures/charts

✅ Extendable → Works with other formats (HTML, DOCX), supports chunking

Future potential: Docling's VLM capabilities for figure understanding and chart recognition open up exciting possibilities for multimodal RAG systems.

Result? Table contents, figure descriptions, and equations survive parsing, so the search layer we build next can find papers by them. That's not just better extraction - that's intelligent document understanding.

Docling also ships OCR and VLM support we don't use yet, and that headroom was part of the appeal.

Always evaluate tools with a long-term horizon: will this adapt as your use case evolves?

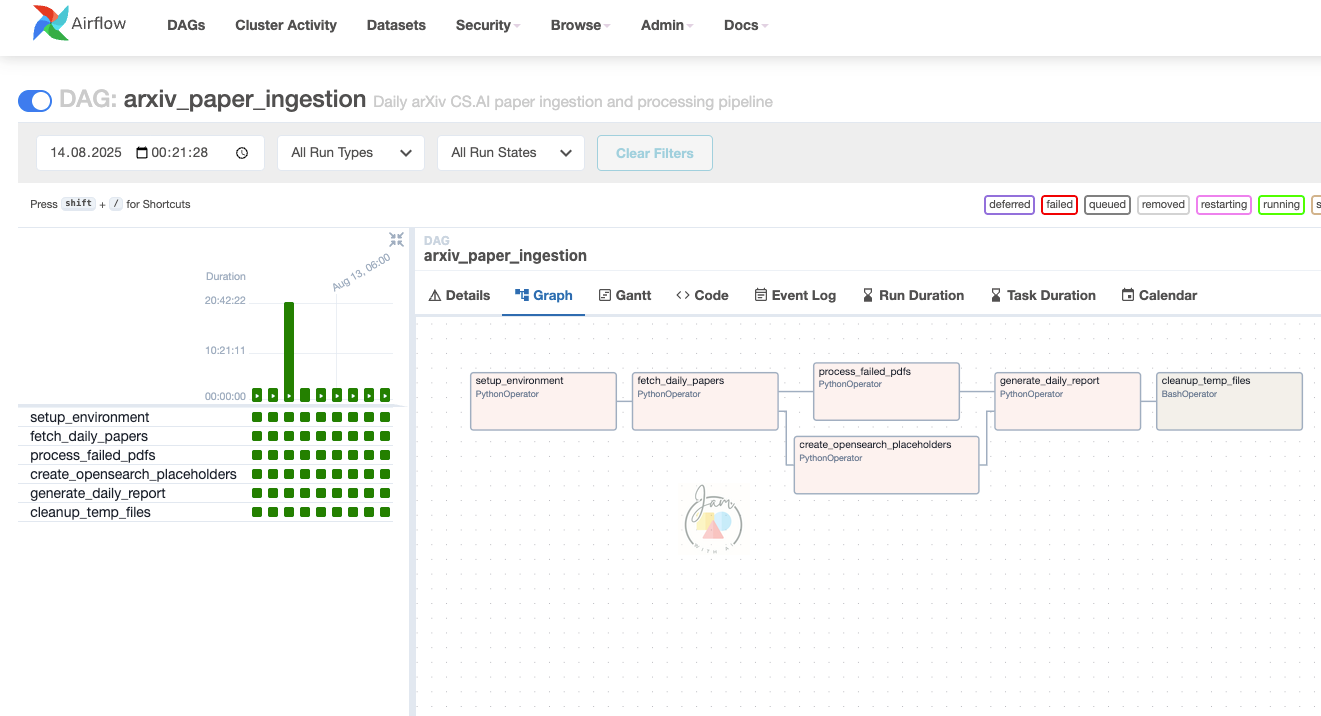

Production Airflow: Beyond Hello World

Week 1 gave us Airflow running. Week 2 gave us real production workflows.

The Production DAG Architecture

Our arxiv_paper_ingestion DAG isn't a toy; it's enterprise-grade automation:

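```python
from datetime import datetime, timedelta

from airflow import DAG

with DAG(
    dag_id="arxiv_paper_ingestion",
    # Daily at 6 AM UTC, after arXiv's daily announcement of new submissions
    schedule="0 6 * * *",
    start_date=datetime(2025, 1, 1),  # hypothetical; use your own start date
    catchup=False,  # assumption: don't backfill missed days
    # Production error handling: retry a failed task twice, 30 minutes apart
    default_args={"retries": 2, "retry_delay": timedelta(minutes=30)},
    # Prevent concurrent runs
    max_active_runs=1,
) as dag:
    ...  # tasks for the four stages go here
```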

The Four-Stage Pipeline

Setup Environment: Verify all services and initialize caching

Fetch Daily Papers: Get yesterday's papers with proper rate limiting

Process Failed PDFs: Retry any parsing failures with different strategies

Generate Daily Report: Comprehensive statistics and monitoring
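For the "Fetch Daily Papers" stage, the arXiv API's `search_query` supports a `submittedDate:[YYYYMMDDHHMM TO YYYYMMDDHHMM]` range (in GMT) alongside `cat:` filters. Here's a sketch of building yesterday's cs.AI query. The helper names and the `max_results` default are assumptions, and this post doesn't say whether the real pipeline filters by submission date.

```python
from datetime import date, timedelta
from urllib.parse import urlencode

ARXIV_API_URL = "http://export.arxiv.org/api/query"


def daily_query_url(day: date, category: str = "cs.AI",
                    max_results: int = 100, start: int = 0) -> str:
    """Build an arXiv API query for papers in `category` submitted on `day` (GMT)."""
    stamp = day.strftime("%Y%m%d")
    params = {
        # Whole-day window: 00:00 to 23:59 GMT
        "search_query": f"cat:{category} AND submittedDate:[{stamp}0000 TO {stamp}2359]",
        "start": start,              # offset for paging through large days
        "max_results": max_results,
        "sortBy": "submittedDate",
        "sortOrder": "descending",
    }
    return f"{ARXIV_API_URL}?{urlencode(params)}"


def yesterday_query_url(today: date, **kwargs) -> str:
    """Convenience wrapper: query for the day before `today`."""
    return daily_query_url(today - timedelta(days=1), **kwargs)
```

On busy days, the fetch step would page by bumping `start`, with each page request going through the rate limiter.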

What makes this production-ready?

Error isolation: Individual paper failures don't break the entire pipeline

Retry logic: Intelligent handling of transient failures

Monitoring: Detailed logs and success metrics

Resource management: Controlled concurrency to avoid overwhelming systems
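Error isolation and the retry stage can be sketched as plain functions. This is a minimal illustration, assuming a "parser" is any callable that takes a paper ID and returns text; `RunReport`, `process_papers`, `retry_failed`, and `daily_summary` are hypothetical names, and in Airflow each stage would be its own task.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Sequence

Parser = Callable[[str], str]  # hypothetical: paper ID in, parsed text out


@dataclass
class RunReport:
    parsed: Dict[str, str] = field(default_factory=dict)
    failed: Dict[str, str] = field(default_factory=dict)  # paper ID -> last error

    @property
    def success_rate(self) -> float:
        total = len(self.parsed) + len(self.failed)
        return len(self.parsed) / total if total else 0.0


def process_papers(paper_ids: Sequence[str], parser: Parser) -> RunReport:
    """Fetch/parse stage: each paper in isolation, so one failure never aborts the batch."""
    report = RunReport()
    for paper_id in paper_ids:
        try:
            report.parsed[paper_id] = parser(paper_id)
        except Exception as exc:  # record the failure and keep going
            report.failed[paper_id] = f"{type(exc).__name__}: {exc}"
    return report


def retry_failed(report: RunReport, fallbacks: List[Parser]) -> RunReport:
    """Retry stage: try each fallback strategy in turn for every failed paper."""
    for paper_id in list(report.failed):
        for fallback in fallbacks:
            try:
                report.parsed[paper_id] = fallback(paper_id)
                del report.failed[paper_id]
                break
            except Exception as exc:
                report.failed[paper_id] = f"{type(exc).__name__}: {exc}"
    return report


def daily_summary(report: RunReport) -> str:
    """Report stage: one line of statistics for logs and monitoring."""
    return (f"parsed={len(report.parsed)} failed={len(report.failed)} "
            f"success_rate={report.success_rate:.0%}")
```

Because every paper gets its own `try`/`except`, one corrupt PDF shows up as a single entry in `failed` instead of a crashed DAG run.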

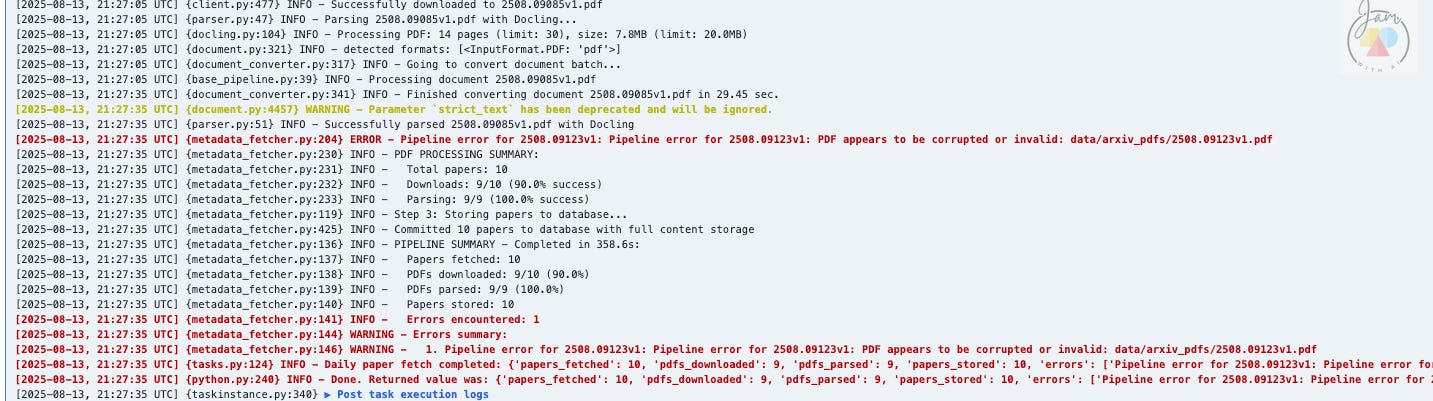

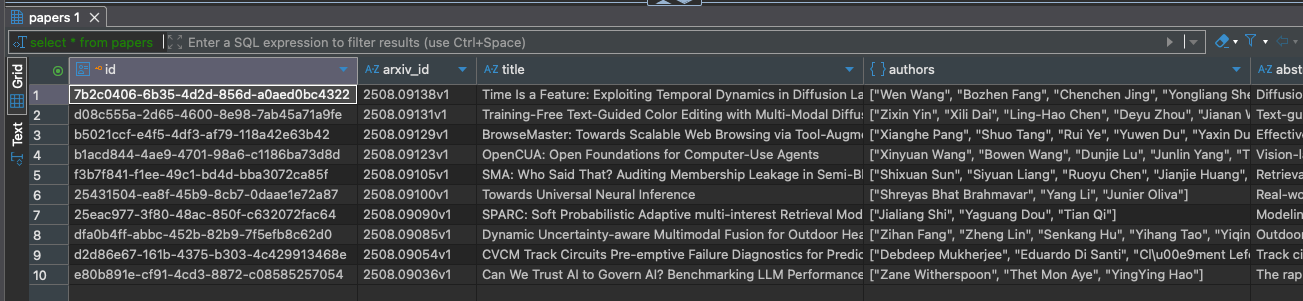

The Results: From Zero to Rich Content

After running our Week 2 pipeline, here's what our database looks like:

Rich, Structured Academic Content

Complete Metadata: Title, authors, abstract, categories, publication date

Full-Text Content: Parsed PDF content ready for search and retrieval

Structured Storage: Normalized database schema optimized for querying

Daily Updates: Fresh papers automatically ingested every morning
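The metadata fields above map fairly directly onto the arXiv API's Atom response: each `<entry>` carries `<id>`, `<title>`, `<summary>` (the abstract), `<author><name>`, `<category term="...">`, and `<published>`. Here's a minimal parsing sketch using only the standard library; `PaperMetadata` and `parse_arxiv_feed` are hypothetical, and the project's real schema may differ.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List, Optional

ATOM = "{http://www.w3.org/2005/Atom}"  # Atom XML namespace used by the feed


@dataclass
class PaperMetadata:
    arxiv_id: str
    title: str
    authors: List[str]
    abstract: str
    categories: List[str]
    published: str  # ISO 8601 timestamp string, as returned by the API


def _clean(text: Optional[str]) -> str:
    """Collapse the line breaks and extra spaces the feed puts in titles/abstracts."""
    return " ".join((text or "").split())


def parse_arxiv_feed(xml_text: str) -> List[PaperMetadata]:
    """Turn an arXiv API Atom response into metadata records."""
    root = ET.fromstring(xml_text)
    papers = []
    for entry in root.findall(f"{ATOM}entry"):
        abs_url = entry.findtext(f"{ATOM}id", default="")
        papers.append(PaperMetadata(
            arxiv_id=abs_url.rsplit("/abs/", 1)[-1],  # ".../abs/<id>" -> "<id>"
            title=_clean(entry.findtext(f"{ATOM}title")),
            authors=[_clean(a.findtext(f"{ATOM}name"))
                     for a in entry.findall(f"{ATOM}author")],
            abstract=_clean(entry.findtext(f"{ATOM}summary")),
            categories=[c.get("term", "") for c in entry.findall(f"{ATOM}category")],
            published=_clean(entry.findtext(f"{ATOM}published")),
        ))
    return papers
```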

Production Performance Metrics

10-100+ papers per day: Configurable based on your needs

90%+ success rate: Robust error handling means high reliability

2-3 minute processing time: Docling is slow but thorough, and running many parses concurrently drives CPU usage up sharply

Cross-platform reliability: Works on macOS, Linux (including Ubuntu), and Windows via WSL

The Hard-Won Lessons:

What Two Weeks of Reality Taught Us

Building this system taught us lessons you can't learn from tutorials. Here are the insights that will save you weeks of debugging:

1. Data Engineering Is 80% of AI Engineering

You might think data engineering is the boring stuff you do before the real AI work. It isn't.

In our RAG system:

80% of the complexity: Getting reliable data into the system

15% of the complexity: Making that data searchable and retrievable

5% of the complexity: The actual LLM generation everyone obsesses over

The ratio might actually be 90/10. Good data engineering is a force multiplier for everything else.

2. Error Handling Is Half Your Code

Here's something they don't teach in bootcamps: in production systems, error handling often comprises more lines of code than the happy path.

Our arXiv client has:

20 lines for the basic API call

80 lines for rate limiting, retries, timeout handling, and graceful degradation

That's not bloat - that's the difference between a script that works once and a system that works for months.

3. Choose Domain-Specific Tools

We learned there's a hierarchy to tool selection:

Level 1: "This tool exists and does the thing we need"

Level 2: "This tool is reliable and well-maintained"

Level 3: "This tool is designed for our specific domain"

Most people stop at Level 1. We learned to prioritize Level 3, and it made all the difference. Docling isn't just a PDF parser - it's a scientific document understanding engine. That specificity matters.

4. Configuration Management Is Critical

There's a moment in every production system where you realize you need configuration management. Not for deployment, but for survival.

Our system has configurable:

Rate limiting delays

Retry counts and backoff strategies

Concurrency limits

Timeout thresholds

PDF cache locations

Database connection pools

This isn't over-engineering - it's what lets you tune the system for different environments without changing code.
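One way to get those knobs out of the code is a settings object whose defaults environment variables can override. Here's a minimal sketch, assuming a `PIPELINE_` prefix and illustrative default values (neither comes from the project). It also refuses an arXiv delay below the 3-second rule.

```python
import os
from dataclasses import dataclass, fields
from typing import Mapping, Optional


@dataclass(frozen=True)
class PipelineSettings:
    # Defaults are illustrative assumptions, not the project's actual values.
    arxiv_rate_limit_delay: float = 3.0   # seconds between arXiv requests
    max_retries: int = 3
    retry_backoff_base: float = 5.0       # seconds; doubled on each retry
    max_concurrent_parsing: int = 2
    request_timeout: float = 30.0         # seconds
    pdf_cache_dir: str = "./data/arxiv_pdfs"
    db_pool_size: int = 5

    def __post_init__(self) -> None:
        if self.arxiv_rate_limit_delay < 3.0:
            raise ValueError("arXiv asks for at least 3 seconds between requests")

    @classmethod
    def from_env(cls, env: Optional[Mapping[str, str]] = None,
                 prefix: str = "PIPELINE_") -> "PipelineSettings":
        """Override any field with an env var named PREFIX + FIELD_NAME (upper-case)."""
        env = os.environ if env is None else env
        overrides = {}
        for f in fields(cls):
            raw = env.get(prefix + f.name.upper())
            if raw is not None:
                # Cast with the default's type, so "5" becomes 5 or 5.0 as needed.
                overrides[f.name] = type(f.default)(raw)
        return cls(**overrides)
```

With this, `PIPELINE_MAX_RETRIES=5` changes retry behavior in staging without touching code. Libraries such as pydantic-settings offer the same pattern with richer validation.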

5. Documentation Helps You Debug

We started writing documentation to help others use the system. We ended up using it to debug our own code.

When you're forced to explain how your rate limiting works, you discover the edge cases you missed. When you document your error handling strategy, you realize where the gaps are.

Good documentation isn't just communication - it's thinking made visible.

The Professional Difference: Engineering Discipline

Here's what separates weekend projects from production systems: engineering discipline.

What We Built vs. What Most People Build

Most RAG tutorials:

Single Python script that breaks if anything goes wrong

Manual data loading from CSVs

No error handling or monitoring

Works on developer's laptop, fails everywhere else

What we built:

Modular service architecture with clear interfaces

Automated data pipelines with comprehensive error handling

Production workflows with monitoring and retry logic

Cross-platform reliability that works in any environment

The Compound Effect

Every production decision we made in Week 2 will pay dividends in Week 3+. Clean architecture, robust error handling, and proper service boundaries make everything else easier.

Try It Yourself: Week 2 Implementation

Want to build your own production data pipeline? Here's how:

Quick Start

```bash
# Start the complete system
docker compose up --build -d

# Run the Week 2 interactive notebook
uv run jupyter notebook notebooks/week2/week2_arxiv_integration.ipynb

# Trigger the production pipeline:
# visit http://localhost:8080 and enable the arxiv_paper_ingestion DAG
```

What You'll Master

Production API integration with rate limiting and error handling

Scientific document processing using specialized tools

Async Python patterns for concurrent processing

Airflow orchestration for reliable automation

Service architecture for maintainable systems

Resources and Next Steps

Week 2 Materials

Interactive Guide: Week 2 Notebook

Week 2 code: GitHub Code

Learn More

Week 1 Foundation: The Infrastructure That Powers RAG Systems

arXiv API: Official Documentation

Docling: Scientific PDF Processing

What's Coming in Week 3:

Making Data Discoverable

Week 2 gave us rich content. Week 3 will make it searchable and discoverable:

The Week 3 Goal: Hybrid Search

OpenSearch Integration: Real BM25 search replacing placeholder endpoints

Document Indexing: Structured content indexing for fast retrieval

Search API: REST endpoints for paper discovery and filtering

Query Processing: Advanced search with category and date filtering

The RAG Foundation is Taking Shape

✅ Infrastructure (Week 1): Rock-solid service foundation

✅ Data Pipeline (Week 2): Fresh academic content daily

🔄 Search Engine (Week 3): Making content discoverable

🔄 RAG Pipeline (Week 4+): Complete retrieval-augmented generation

Our foundation is now rock solid. Every day, 10-100 research papers flow into our system - parsed, structured, ready for intelligent querying. That's the compound interest of good engineering.

This is how you build AI systems that matter: one bulletproof component at a time, with respect for complexity and humility about what you don't know yet.

Follow Along: This is Week 2 of 6 in our Zero to RAG series.

Every Thursday, we release new content, code, and notebooks.

The data is flowing. The foundation is set.

Next week, we make it searchable. 💪

Subscribe so you don't miss the journey from infrastructure to a production RAG system.
