The Complete RAG System

Mother of AI Project, Phase 1: Week 5

Hey there 👋,

Welcome to lesson five of "The Mother of AI" - Zero to RAG series!

Quick recap:

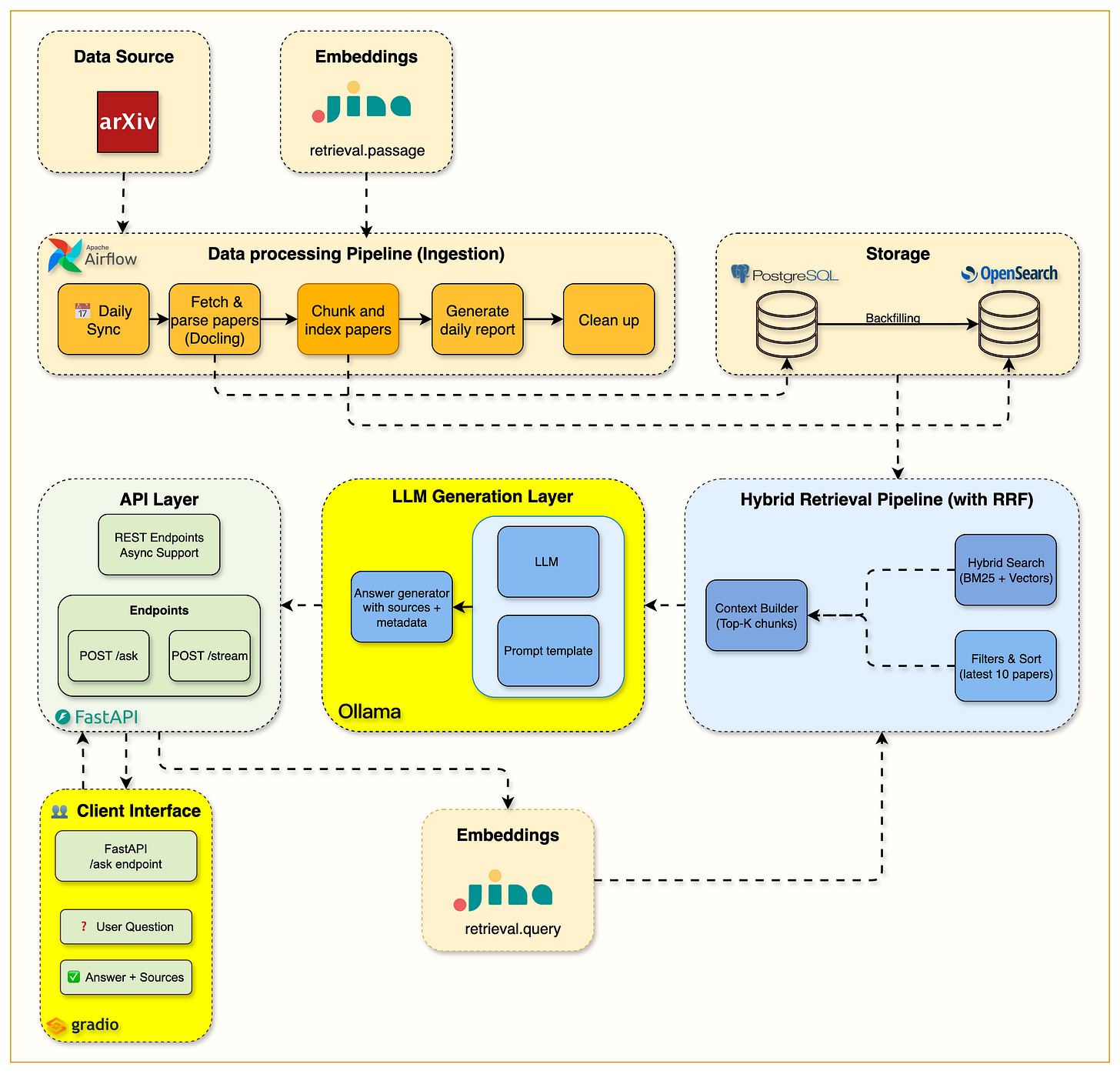

A strong RAG system is a chain: infra → data → search → generation.

Weeks 1–4 gave us solid infrastructure, a live data pipeline, BM25 keyword search, and hybrid retrieval with semantic understanding. Now we complete the loop with LLM integration that delivers production-grade performance.

Most teams integrate LLMs and call it done. We won't. We'll implement smart prompt optimization, streaming responses for better UX, and a production-ready interface that actually works.

This week's goals

Integrate Ollama for local LLM inference with llama3.2 models

Optimize prompts with smart context reduction strategies

Implement streaming with Server-Sent Events for real-time responses

Build Gradio interface for interactive RAG system testing

Create production API with two focused endpoints for different use cases

Deliverables

Ollama service integration with automatic model management and health checks

Optimized prompt templates with minimal context for efficiency

Dual API design supporting both complete responses and streaming

Gradio web interface with real-time streaming and source citations

Production configuration with environment-based settings and error handling

Big picture: We took the hybrid search from Week 4 and connected it to local LLM inference, creating a complete RAG pipeline.

The key insight: removing redundant metadata and limiting response length delivers cleaner prompts without sacrificing answer quality.

The architecture now includes a complete generation layer: hybrid search retrieves relevant chunks, minimal prompts preserve the context window, and Ollama generates focused answers with source citations.

What we built (high level)

Complete RAG system with LLM generation layer (Ollama), hybrid retrieval pipeline, and Gradio interface

LLM Generation Layer: Ollama service running llama3.2 models locally for privacy and control

Optimized Prompt Pipeline: Context builder selecting top-K chunks with minimal overhead

Streaming Architecture: Real-time token generation via Server-Sent Events

Interactive Interface: Gradio app for testing queries with live streaming

Production API: Two endpoints optimized for different use cases

What goes into the LLM

We send minimal, focused chunks to the LLM:

    # Each chunk contains only what's necessary
    chunk = {
        "arxiv_id": "1706.03762",  # For citations
        "chunk_text": "The actual relevant content from the paper...",
    }

This could be extended or updated as per use case :)

The LLM receives:

The user's question

Top-K relevant chunks (typically 3)

A clear system prompt with instructions

No redundant metadata or unnecessary information

This clean approach ensures the LLM focuses on answering the question using the provided content rather than processing irrelevant metadata.
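To make the idea concrete, here is a minimal sketch of how full search hits could be reduced to the two fields shown above. The function name `to_minimal_chunks` and the extra hit fields (`score`, `title`, `categories`) are assumptions for illustration; the project's real helper may look different.

```python
from typing import Any


def to_minimal_chunks(hits: list[dict[str, Any]], top_k: int = 3) -> list[dict[str, str]]:
    """Keep only the fields the prompt needs from the top-K search hits."""
    minimal = []
    for hit in hits[:top_k]:
        minimal.append(
            {
                "arxiv_id": hit["arxiv_id"],      # kept for citations
                "chunk_text": hit["chunk_text"],  # kept as LLM context
            }
        )
    return minimal


# Hypothetical search hit carrying metadata the LLM does not need
hits = [
    {
        "arxiv_id": "1706.03762",
        "chunk_text": "The actual relevant content from the paper...",
        "score": 0.92,
        "title": "Attention Is All You Need",
        "categories": ["cs.CL"],
    }
]

minimal_chunks = to_minimal_chunks(hits)
print(minimal_chunks)
```

Everything except `arxiv_id` and `chunk_text` is dropped before the prompt is built, which keeps the context window free for actual paper content.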

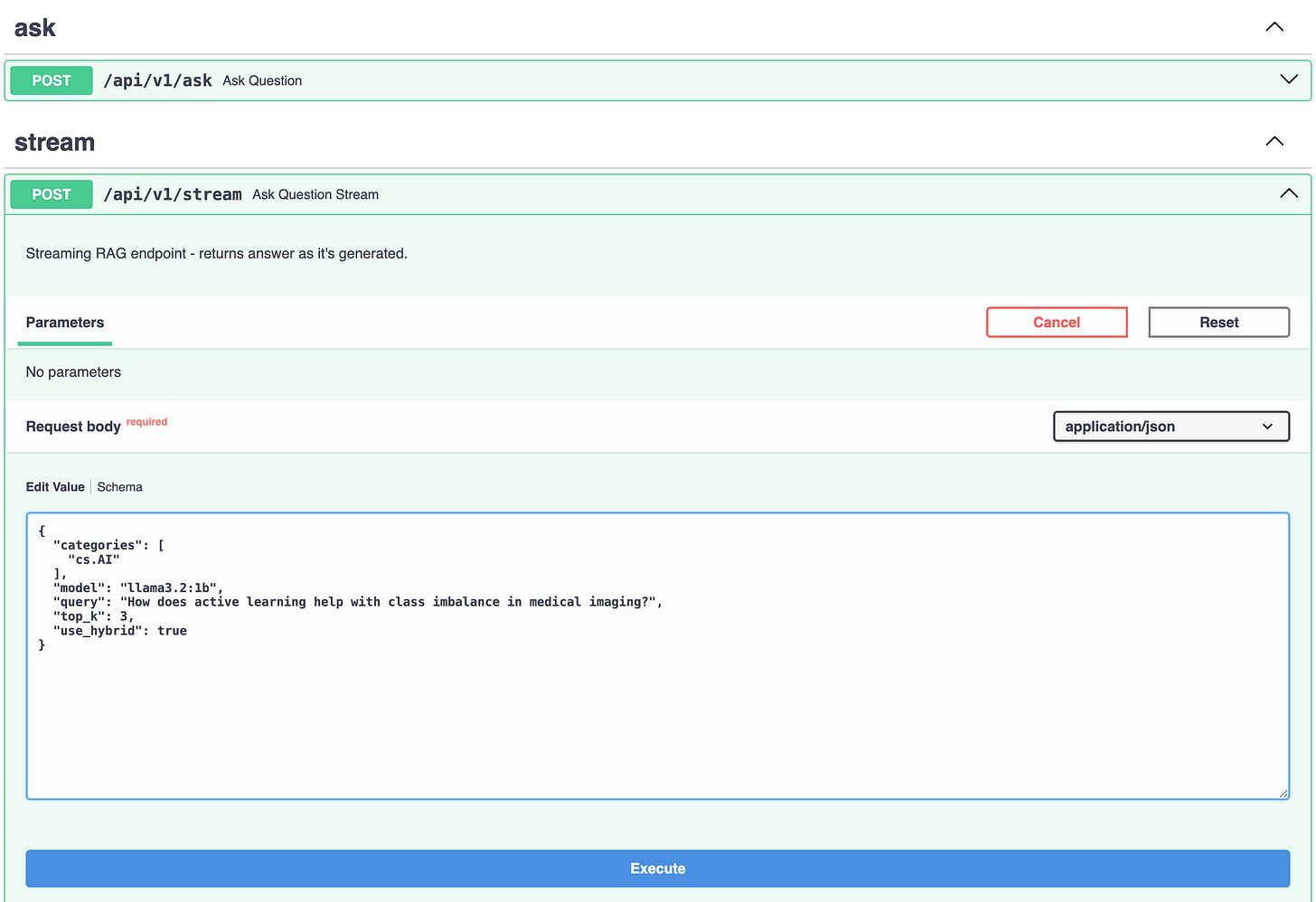

The dual API design

Not every use case needs the same response pattern. We built two endpoints optimized for different scenarios:

Standard RAG endpoint (/api/v1/ask)

Perfect for API integrations, batch processing, and when you need complete responses with metadata:

    @ask_router.post("/ask", response_model=AskResponse)
    async def ask_question(
        request: AskRequest,
        opensearch_client: OpenSearchDep,
        embeddings_service: EmbeddingsDep,
        ollama_client: OllamaDep,
    ) -> AskResponse:
        """
        Complete RAG response with sources and metadata.
        """
        chunks, sources, search_mode = await _prepare_chunks_and_sources(
            request, opensearch_client, embeddings_service
        )

        response = await ollama_client.generate_rag_response(
            query=request.query,
            chunks=chunks,
            model=request.model,
        )

        return AskResponse(
            query=request.query,
            answer=response["answer"],
            sources=sources,
            confidence=response["confidence"],
            search_mode=search_mode,
        )

Streaming endpoint (/api/v1/stream)

Built for interactive UIs where time-to-first-token matters more than complete responses:

    import json

    from fastapi.responses import StreamingResponse

    @stream_router.post("/stream")
    async def stream_question(
        request: AskRequest,
        opensearch_client: OpenSearchDep,
        embeddings_service: EmbeddingsDep,
        ollama_client: OllamaDep,
    ):
        """
        Stream RAG response via Server-Sent Events.
        Provides real-time token generation.
        """
        # Retrieve chunks and sources first, as in the standard endpoint
        chunks, sources, _search_mode = await _prepare_chunks_and_sources(
            request, opensearch_client, embeddings_service
        )

        async def generate():
            # Send metadata immediately
            yield f"data: {json.dumps({'sources': sources})}\n\n"

            # Stream tokens as they arrive
            async for token in ollama_client.generate_rag_stream(
                query=request.query,
                chunks=chunks,
                model=request.model,
            ):
                yield f"data: {json.dumps({'token': token})}\n\n"

        return StreamingResponse(
            generate(),
            media_type="text/event-stream",
        )

Real-world example:

Let's trace a query through the complete system:

1. Query arrives at API

    {
      "query": "How does active learning help with class imbalance in medical imaging?",
      "top_k": 3,
      "use_hybrid": true,
      "model": "llama3.2:1b",
      "categories": ["cs.AI"]
    }

2. Hybrid search retrieves chunks

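BM25 finds: Papers containing the exact query terms, such as "active learning" and "class imbalance"

Vector search finds: Semantically related papers, for example on learning with limited labeled data or reducing annotation burden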

RRF fusion: Combines both for best results
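For readers who want to see what the fusion step does, here is a small sketch of Reciprocal Rank Fusion in plain Python. It is only an illustration: in this project the fusion may well happen inside the search engine, the constant `k=60` is a commonly used default rather than a value confirmed by this lesson, and `paper-c` / `paper-d` are made-up IDs.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked ID lists: each list adds 1 / (k + rank) to a document's score."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for deterministic output
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical rankings from the two retrievers
bm25_ranking = ["2408.15231", "2107.03463", "paper-c"]
vector_ranking = ["2107.03463", "paper-d", "2408.15231"]

fused = reciprocal_rank_fusion([bm25_ranking, vector_ranking])
print(fused)
```

Documents that rank well in both lists rise to the top, even if neither retriever put them first on its own.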

3. Minimal context preparation

    chunks = [
        {
            "arxiv_id": "2408.15231",
            "chunk_text": "Deep Active Learning for Lung Disease Severity Classification addresses the challenge of ... with limited labeled data. Our approach...",
        },
        {
            "arxiv_id": "2408.15231",
            "chunk_text": "To handle severe class imbalance in lung disease datasets, we employ a combination...",
        },
        {
            "arxiv_id": "2107.03463",
            "chunk_text": "Active learning in medical imaging reduces annotation burden by iteratively selecting ...",
        },
    ]

4. Prompt template construction

The system creates a focused prompt by combining:

    # System prompt (from rag_system.txt)
    system_prompt = """You are an AI assistant specialized in answering questions about
    academic papers from arXiv. Base your answer STRICTLY on the provided paper excerpts.
    LIMIT YOUR RESPONSE TO 300 WORDS MAXIMUM."""

    # Context from chunks
    context = """### Context from Papers:
    [1. arXiv:2408.15231]
    Deep Active Learning for Lung Disease Severity Classification addresses the challenge...
    [2. arXiv:2408.15231]
    To handle severe class imbalance in lung disease datasets, we employ a combination...
    [3. arXiv:2107.03463]
    Active learning in medical imaging reduces annotation burden by iteratively selecting..."""

    # User question
    question = "How does active learning help with class imbalance in medical imaging?"

    # Final prompt sent to Ollama
    final_prompt = f"{system_prompt}\n\n{context}\n\n### Question:\n{question}\n\n### Answer (cite sources using [arXiv:id] format):"

The clean, structured prompt ensures the LLM focuses on the relevant content without distractions.
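The same assembly can be wrapped in a small helper. This is a sketch under assumptions: the function name `build_rag_prompt` is hypothetical, and the real prompt builder in the repository may differ, but the layout mirrors the template shown above.

```python
def build_rag_prompt(system_prompt: str, chunks: list[dict[str, str]], question: str) -> str:
    """Assemble system prompt, numbered context chunks, and the question into one prompt."""
    context_lines = ["### Context from Papers:"]
    for i, chunk in enumerate(chunks, start=1):
        context_lines.append(f"[{i}. arXiv:{chunk['arxiv_id']}]")
        context_lines.append(chunk["chunk_text"])
    context = "\n".join(context_lines)
    return (
        f"{system_prompt}\n\n{context}\n\n"
        f"### Question:\n{question}\n\n"
        "### Answer (cite sources using [arXiv:id] format):"
    )


example_chunks = [
    {
        "arxiv_id": "2408.15231",
        "chunk_text": "To handle severe class imbalance in lung disease datasets, we employ a combination...",
    }
]
prompt = build_rag_prompt(
    "Base your answer STRICTLY on the provided paper excerpts.",
    example_chunks,
    "How does active learning help with class imbalance in medical imaging?",
)
print(prompt)
```

Numbering each chunk and tagging it with its arXiv ID gives the model something concrete to cite.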

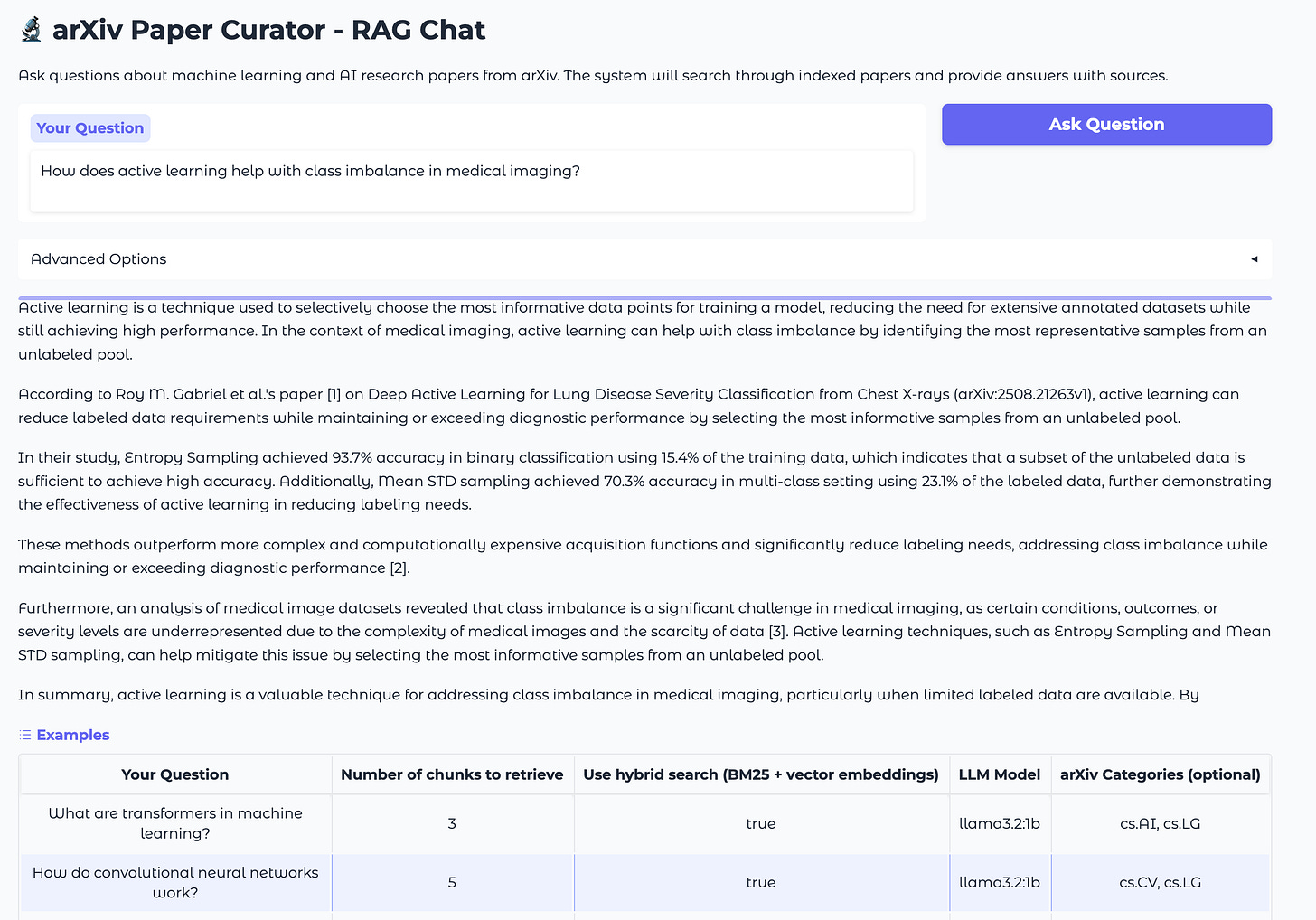

5. LLM generates response

Active learning helps address class imbalance in medical imaging through

strategic sample selection and efficient use of limited annotations.

In the context of lung disease classification from chest X-rays

(arXiv:2408.15231), active learning reduces labeling requirements by 60%

while maintaining diagnostic accuracy. The approach uses uncertainty

sampling to identify the most....

6. Response includes sources

    {
      "answer": "[above text]",
      "sources": [
        "https://arxiv.org/pdf/2408.15231.pdf",
        "https://arxiv.org/pdf/2107.03463.pdf"
      ],
      "confidence": "high",
      "search_mode": "hybrid"
    }

The Gradio interface

We built an interactive web UI that makes testing and demonstrating the RAG system effortless:

    # gradio_launcher.py
    import gradio as gr

    from src.gradio_app import create_interface

    if __name__ == "__main__":
        interface = create_interface()
        interface.launch(
            server_name="0.0.0.0",
            server_port=7861,
            share=False,
        )

Features:

Real-time streaming: See tokens appear as they're generated

Source citations: Click to open PDF sources

Search mode toggle: Compare BM25 vs hybrid results

Category filtering: Focus on specific arXiv categories

Response timing: Track performance for different configurations

Access it at http://localhost:7861 after running:

uv run python gradio_launcher.py
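A quick note on how the real-time streaming feature typically works in Gradio: when a handler is a generator, Gradio re-renders the output each time the handler yields. So the usual pattern is to yield the answer accumulated so far, not individual tokens. The sketch below shows only that pattern, without importing Gradio; `stream_answer` is a hypothetical name, and the actual code in `src/gradio_app.py` is not shown in this lesson.

```python
from typing import Iterable, Iterator


def stream_answer(tokens: Iterable[str]) -> Iterator[str]:
    """Yield the answer built so far after each token, for a UI that redraws per yield."""
    answer = ""
    for token in tokens:
        answer += token
        yield answer


# Made-up token stream standing in for the LLM output
partial_answers = list(stream_answer(["Active", " learning", " helps"]))
print(partial_answers)
```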

Building on weeks 1-4

Our complete RAG system seamlessly integrates everything we've built:

Week 1 infrastructure: Docker containers now include Ollama service

Week 2 data pipeline: Papers flow automatically into searchable chunks

Week 3 BM25 search: Forms the keyword component of retrieval

Week 4 hybrid search: Provides semantic understanding for better results

Week 5 generation: Completes the loop with optimized LLM responses

The compound effect: Because we built proper abstractions from the start, adding LLM generation was mostly about optimization and interface design, not complex integration work.

Production configuration

Model selection based on hardware:

| Model | RAM Required | Quality | Use Case |
|---|---|---|---|
| llama3.2:1b | 2GB | Good | Default, demos |
| llama3.2:3b | 4GB | Better | Production |
| phi3:mini | 2GB | Good | Alternative |
| mistral:7b | 8GB | Best | High quality |
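If you want to pick a model programmatically, the table can be turned into a tiny lookup. This is a sketch only: `pick_model` is a hypothetical helper, and it simply chooses the largest listed model whose stated RAM requirement fits, preferring the default `llama3.2:1b` on ties.

```python
from typing import Optional

# (model, RAM required in GB), taken from the table above
MODEL_RAM_GB = [
    ("llama3.2:1b", 2),
    ("phi3:mini", 2),
    ("llama3.2:3b", 4),
    ("mistral:7b", 8),
]


def pick_model(available_ram_gb: float) -> Optional[str]:
    """Return the largest listed model that fits in the available RAM, or None."""
    best = None
    for name, ram in MODEL_RAM_GB:
        # Strict '>' keeps the earlier entry (the default model) on ties
        if ram <= available_ram_gb and (best is None or ram > best[1]):
            best = (name, ram)
    return best[0] if best else None


print(pick_model(6))
```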

Environment configuration:

# .env

OLLAMA_HOST=http://ollama:11434

OLLAMA__DEFAULT_MODEL=llama3.2:1b

OLLAMA__TIMEOUT=120

OLLAMA__MAX_TOKENS=300

# Performance tuning

OPENSEARCH__MAX_TEXT_SIZE=1000000

RAG__TOP_K_DEFAULT=3

RAG__USE_HYBRID_DEFAULT=true
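The double underscore in names like `OLLAMA__TIMEOUT` looks like a nesting delimiter, in the style of the `env_nested_delimiter` option in pydantic-settings. That is an assumption; this lesson does not show how the settings are actually loaded. Under that assumption, a stdlib-only sketch of the grouping looks like this (`load_nested_settings` is a hypothetical helper):

```python
def load_nested_settings(environ: dict[str, str], delimiter: str = "__") -> dict[str, dict[str, str]]:
    """Group SECTION__FIELD variables into {section: {field: value}}."""
    settings: dict[str, dict[str, str]] = {}
    for key, value in environ.items():
        if delimiter not in key:
            continue  # flat variables such as OLLAMA_HOST are not nested
        section, _, field = key.partition(delimiter)
        settings.setdefault(section.lower(), {})[field.lower()] = value
    return settings


# Values copied from the .env example above
example_env = {
    "OLLAMA_HOST": "http://ollama:11434",
    "OLLAMA__DEFAULT_MODEL": "llama3.2:1b",
    "OLLAMA__TIMEOUT": "120",
    "OLLAMA__MAX_TOKENS": "300",
    "RAG__TOP_K_DEFAULT": "3",
    "RAG__USE_HYBRID_DEFAULT": "true",
}

settings = load_nested_settings(example_env)
print(settings["ollama"]["default_model"], int(settings["rag"]["top_k_default"]))
```

Values arrive as strings, so numeric and boolean settings still need to be converted (a settings library would normally do that for you).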

Docker resource allocation:

    # docker-compose.yml
    ollama:
      image: ollama/ollama:latest
      deploy:
        resources:
          limits:
            memory: 4G
          reservations:
            memory: 2G

Configuration options

We tested various configurations to find the sweet spot.

Key findings:

top_k=3 provides the best balance of context and quality

Hybrid search significantly improves relevance

Streaming improves perceived responsiveness

Prefer the streaming endpoint whenever time to first token matters
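To benefit from streaming, the client has to consume events as they arrive. Here is a minimal sketch of parsing the Server-Sent Events produced by the streaming endpoint, assuming the event shape shown earlier in this lesson (one `sources` event, then `token` events). The raw lines below are made-up sample data, and the parser only handles the simple `data: ` form.

```python
import json
from typing import Iterable, Iterator


def parse_sse_events(lines: Iterable[str]) -> Iterator[dict]:
    """Yield the JSON payload of every 'data: ...' line; skip blank separator lines."""
    prefix = "data: "
    for line in lines:
        line = line.strip()
        if line.startswith(prefix):
            yield json.loads(line[len(prefix):])


# Made-up sample of what the stream could look like on the wire
raw_stream = [
    'data: {"sources": ["https://arxiv.org/pdf/2408.15231.pdf"]}',
    "",
    'data: {"token": "Active"}',
    "",
    'data: {"token": " learning"}',
    "",
]

sources: list[str] = []
answer = ""
for event in parse_sse_events(raw_stream):
    if "sources" in event:
        sources = event["sources"]  # arrives first, before any token
    elif "token" in event:
        answer += event["token"]    # append tokens as they stream in

print(sources, answer)
```

Because the sources arrive in the first event, a UI can render citations right away while the answer is still being generated.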

Code and resources

📓 Code location: https://github.com/jamwithai/arxiv-paper-curator

📓 Interactive Tutorial: notebooks/week5/week5_complete_rag_system.ipynb

Complete walkthrough of the RAG pipeline

Performance comparisons between configurations

Troubleshooting guide for common issues

Examples with real arXiv papers

📁 Key Files:

src/routers/ask.py - RAG API endpoints (standard and streaming)

src/services/ollama/client.py - Ollama integration with streaming

src/services/ollama/prompts/ - Optimized prompt templates

src/gradio_app.py - Interactive web interface

gradio_launcher.py - Simple launcher script

📚 Documentation:

Week 5 README: notebooks/week5/README.md

API Documentation: http://localhost:8000/docs

Gradio Interface: http://localhost:7861

Previous weeks:

Week 2: Bringing Your RAG System to Life

Verifying everything works

Prerequisites:

Start services:

    docker compose up --build -d

Pull LLM model:

    docker exec rag-ollama ollama pull llama3.2:1b

Set embeddings key: Add JINA_API_KEY to .env (get free at https://jina.ai/embeddings/)

Run ingestion: Trigger Airflow DAG to populate data

Testing the implementation

Want to see it all in action? Three ways to test:

1. API Testing

    # Standard endpoint
    curl -X POST "http://localhost:8000/api/v1/ask" \
      -H "Content-Type: application/json" \
      -d '{"query": "What are transformers?", "top_k": 3}'

    # Streaming endpoint
    curl -X POST "http://localhost:8000/api/v1/stream" \
      -H "Content-Type: application/json" \
      -d '{"query": "Explain attention mechanism", "top_k": 2}' \
      --no-buffer

2. Gradio Interface

    uv run python gradio_launcher.py
    # Open http://localhost:7861

3. Interactive Notebook

    uv run jupyter notebook notebooks/week5/week5_complete_rag_system.ipynb

The notebook includes:

Complete setup verification with health checks

Step-by-step testing of RAG pipeline

Performance comparisons across different configurations

Streaming vs standard endpoint

Real examples with actual papers

What's next (Week 6)

Next week we add the finishing touches for production deployment:

Langfuse integration for complete observability

Request tracing from question to answer

Caching layer for common queries

Production deployment configurations

Follow Along: This is Week 5 of 6 in our Zero to RAG series. Every Thursday, we release new content, code, and notebooks.

Let's go 💪
